ImmerseGuard: Bridging Immersion and Environmental Awareness

Technology now surrounds much of our daily lives, so balancing digital immersion with environmental awareness matters more than ever. Recent advances in noise-cancelling technology have significantly improved our auditory experiences, letting us enjoy immersive digital content without the distraction of background noise. However, this isolation from our environment brings its own challenges, particularly in staying aware of important auditory cues around us.

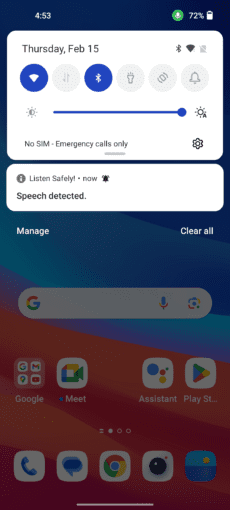

The conceptual framework of our proposed system is illustrated in this figure. Users may activate ANC in different scenarios, including while walking in the street, doing a task at home, or using immersive technologies (such as virtual reality for full immersion). Our system analyzes the input audio of the headphones in real time and, upon detecting a sound event, alerts users via a text or voice notification.
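The detect-then-notify loop described in the caption can be sketched as follows. This is a minimal illustration, not the app's actual code: the `AlertGate` class, the 0.6 threshold, the cooldown length, and the example scores are all hypothetical, and the per-frame scores are assumed to come from whatever sound event classifier runs on the device.

```python
from dataclasses import dataclass, field


@dataclass
class AlertGate:
    """Turns per-frame class scores into debounced alert messages (hypothetical helper)."""
    threshold: float = 0.6    # assumed confidence cutoff, not taken from the paper
    cooldown_frames: int = 5  # assumed number of frames to wait before re-alerting
    _last_alert: dict = field(default_factory=dict)

    def update(self, frame_index, scores):
        """Return alert messages for classes whose score reaches the threshold."""
        alerts = []
        for label, score in scores.items():
            if score < self.threshold:
                continue
            last = self._last_alert.get(label)
            if last is not None and frame_index - last < self.cooldown_frames:
                continue  # the same event is probably still ongoing
            self._last_alert[label] = frame_index
            alerts.append(f"Detected: {label} ({score:.0%})")
        return alerts


# Made-up scores for four consecutive audio frames.
gate = AlertGate()
frames = [
    {"siren": 0.10, "speech": 0.20},
    {"siren": 0.85, "speech": 0.30},
    {"siren": 0.90, "speech": 0.70},
    {"siren": 0.88, "speech": 0.10},
]
for i, scores in enumerate(frames):
    for message in gate.update(i, scores):
        print(message)  # a real app would raise a text or voice notification here
```

The cooldown keeps a sustained sound, such as a siren that lasts several frames, from producing a stream of repeated notifications.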

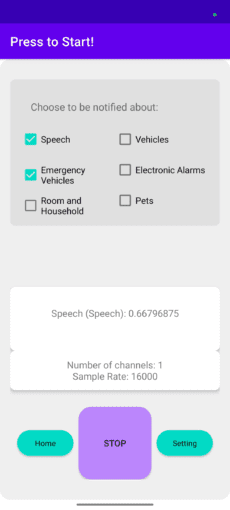

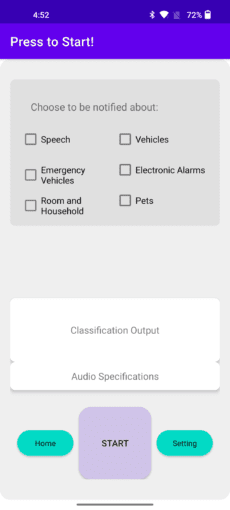

Meet ImmerseGuard, an application that addresses this challenge by integrating an on-device sound event detection system with active noise-cancelling (ANC) technologies. ImmerseGuard uses model compression to run efficiently on smartphones, providing users with real-time alerts for critical sound events without compromising their immersive auditory experience.

At the core of ImmerseGuard’s functionality is YAMNet, a model known for its efficiency in sound event detection on edge devices. Combining YAMNet with a shallower model trained through transfer learning enables ImmerseGuard to identify a wide range of sound events, such as emergency vehicle sirens, general vehicle noises, various room and household sounds, human speech, pet sounds, and device alarms. To optimize the model for deployment on platforms with limited resources, we used quantization techniques, which allow real-time inference and let the model run locally without an internet connection.
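To give a feel for what quantization does to a model's weights, here is a minimal sketch of 8-bit affine quantization in plain Python. The post does not say which quantization scheme ImmerseGuard uses, so the int8 scale and zero-point scheme, the function names, and the sample weights below are assumptions for illustration only.

```python
def quantize_int8(values):
    """Map a list of floats to int8 codes using a scale and a zero point."""
    lo = min(min(values), 0.0)  # the representable range must include 0.0
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # fall back to 1.0 if all values are zero
    zero_point = round(-128 - lo / scale)  # int8 code that represents 0.0
    codes = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return codes, scale, zero_point


def dequantize(codes, scale, zero_point):
    """Recover approximate float values from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


# Made-up weights standing in for one small layer of a classifier.
weights = [-0.42, 0.0, 0.13, 0.91]
codes, scale, zero_point = quantize_int8(weights)
restored = dequantize(codes, scale, zero_point)

print("int8 codes:", codes)
print("max round-trip error:", max(abs(w - r) for w, r in zip(weights, restored)))
```

Each weight is stored in one byte instead of four, and the round-trip error stays on the order of one quantization step (`scale`), which is the kind of trade-off that makes local inference on a smartphone practical.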

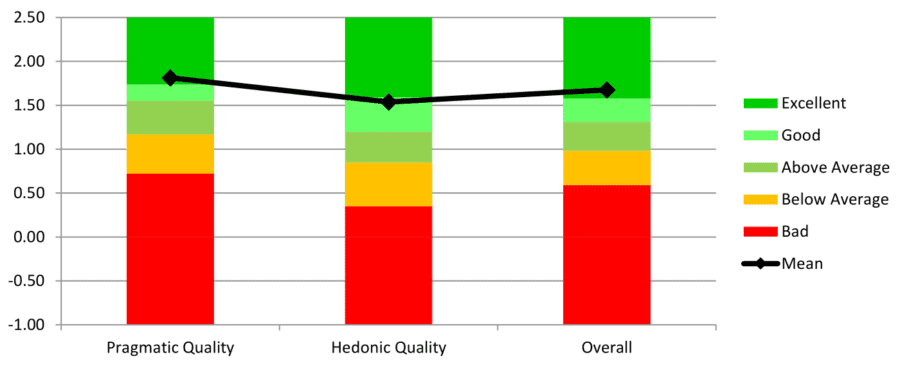

User experience studies conducted with ImmerseGuard have shown notably positive results, indicating its practicality as a tool in enhancing environmental awareness while users are immersed in digital content. Participants in our study appreciated the seamless integration of the app into their smartphones, highlighting the app’s ability to alert them to critical sound events without the need for downloading heavy files or models.

Despite the enthusiasm for ImmerseGuard’s capabilities, our journey doesn’t stop here. Acknowledging the limitations of our initial studies, we are committed to further refining and enhancing ImmerseGuard’s functionality. Future explorations will focus on expanding the application’s versatility and adaptability across a wider range of devices and real-world environments. Additionally, incorporating user feedback, we aim to further personalize the user experience by adding more specific sound event classifications and customizable notification features.

In conclusion, ImmerseGuard represents a significant step forward in our quest to balance the benefits of immersive digital content with the need to stay connected to our surroundings. By harnessing the power of model compression and on-device machine learning, we are paving the way for a future where technology enhances not only our digital experiences but also our safety and awareness of the world around us.

You can download the full-text paper here.