Check out my ResearchGate and Google Scholar!

My Work Experience

This diagram presents a unique snapshot of my career, emphasizing the diverse skillsets I’ve cultivated and the experiences I’ve amassed over the years. Each flow represents an area of expertise or experience, beginning from the institutions where I gained these skills and leading to the specific areas of focus.

Virtual Reality (VR)

Virtual reality is a fascinating field that lies at the intersection of technology, design, and human perception. My work in VR has spanned creating immersive experiences, developing games, and exploring therapeutic applications. One of my primary interests is understanding and optimizing the user experience in VR environments, which requires not only technical and design skills but also a deep understanding of human psychology and sensory processing. I have also combined NLP and VR to create AI-powered avatars that can carry on natural conversations with users.

Machine Learning (ML)

Machine Learning is a powerful tool that has revolutionized countless industries, from tech to healthcare, finance, and beyond. My expertise in ML ranges from traditional techniques such as regression, classification, and clustering to advanced areas like deep learning, neural networks, and dimensionality reduction. I have also worked on multi-modal ML and data fusion concepts, which involve integrating information from multiple sources or types of data. My work at DREAM BIG Lab, Lady Davis Institute at JGH has allowed me to apply these skills in real-world projects.

Data Science

Data science is the backbone of informed decision-making in businesses and organizations. My proficiency in data science encompasses visualization, statistical analysis, and data cleaning and preparation. Visualization and statistical analysis enable me to uncover insights from data and communicate them effectively, while careful data cleaning and preparation ensures that the data feeding into ML models or analysis tools is accurate and relevant. My work at the Lady Davis Institute (LDI) reflects this expertise.

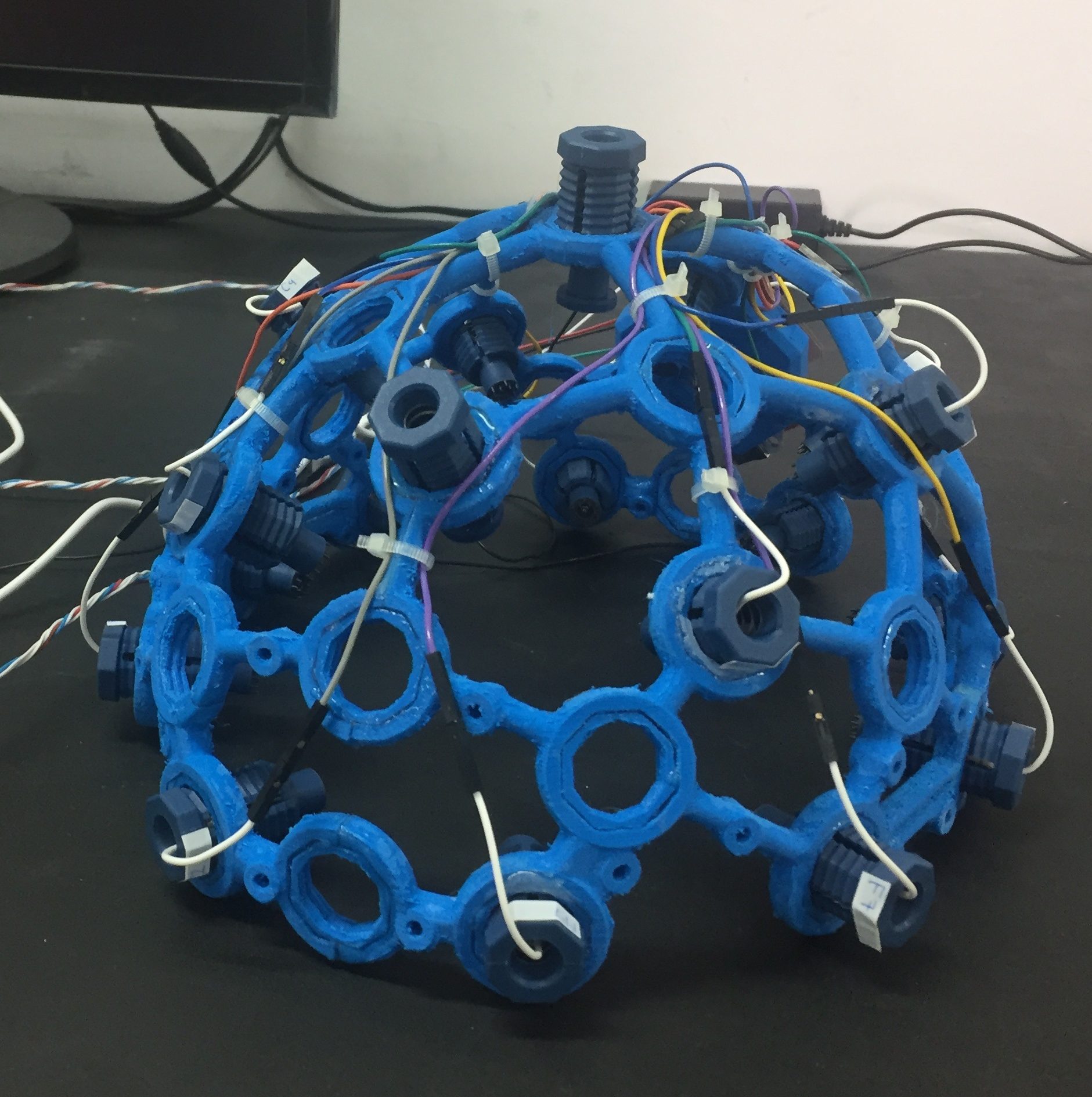

Physiological Computing

Physiological computing involves using technology to interact with human biological systems. My work in this field has covered a variety of aspects, including biofeedback, wearable technology, human-computer interaction, health monitoring, and multi-modal signal processing. Biofeedback and health monitoring involve interpreting signals from the body to understand a person’s physiological state, while human-computer interaction and wearable technology focus on how humans can interact with technology in a seamless, intuitive way.

Portfolio of NLP-based projects!

SmartBib

Simplify your research by filtering relevant papers from your BibTeX files. Save time and ensure accuracy in creating your bibliography. Perfect for students, scholars, and researchers to streamline their work.

McGillian GreenQuest

Learn about sustainability at McGill University with McGillian GreenQuest. This smart AI tool gives you all the info on eco-friendly living and campus initiatives. Get quick answers on recycling, saving energy, and green transport from an easy-to-use platform.

Fill blanks!

This app makes filling out forms easy using AI. Users provide a template with blanks, and the AI creates questions to complete it. This method ensures accurate and personalized answers, perfect for automating form-filling.

Among the AI-powered projects I have built, a few leverage the power of NLP. For example, I created SmartBib to filter relevant papers out of thousands, which makes writing literature reviews much easier. McGillian GreenQuest, on the other hand, is a chatbot that answers users' questions about sustainability initiatives at McGill University. Fill blanks! is another project that helps you fill out forms easily through a conversational approach. You can always contact me to build your own personalized AI apps! (reza [dot] aminigougeh@mail [dot] mcgill.ca)

Latest News

Using HuggingFace without sharing your code!

Have you ever decided to breathe life into one of your Python projects? You may have heard of HuggingFace spaces – a great platform to showcase and share your machine[…]

ImmerseGuard: Bridging Immersion and Environmental Awareness

In an era where our daily lives are constantly surrounded by technology, ensuring the harmony between digital immersion and environmental awareness has become more crucial than ever. Recent advancements in[…]

Chit Chat Charm ^__^

“Talk” with AI … Dive deep into the world of AI-assisted conversations like you’ve never seen before. At Chit Chat Charm, we offer not just text-based interactions but a full-fledged[…]

Contributions

The organizations I have contributed to

OpenLab at UHN

Traumas cote-nord

Lady Davis Institute at JGH

Cyberpsychology Lab of Université du Québec en Outaouais (UQO)

DREAM BIG Lab

McGill University

Below is my Instagram, where I share my main hobby: photography!

Multisensory VR Experiences: My M.Sc. Journey

Our first paper: a review of systems that integrate VR with wearables for stroke rehabilitation!

Next, we examined the impact of multisensory VR on Quality of Experience (QoE) subscales (e.g., immersion, realism, engagement):

QoMEX 2022: Multisensory Immersive Experiences: A Pilot Study on Subjective and Instrumental Human Influential Factors Assessment

MetroXRaine: Quantifying User Behaviour in Multisensory Immersive Experiences

Then we proposed a multisensory VR training paradigm! Its papers are still under review: we investigated its QoE aspects in a paper submitted to the “Quality and User Experience” journal, and its effects on MI-BCI performance are reported in a paper submitted to a Frontiers journal. I hope they get accepted and published as soon as possible!

Towards Instrumental Quality Assessment of Multisensory Immersive Experiences Using a Biosensor-Equipped Head-Mounted Display

Enhancing Motor Imagery Efficacy Using Multisensory Virtual Reality Training

“Talk” with AI

Chit Chat Charm AI

Dive deep into the world of AI-assisted conversations like you’ve never seen before. At Chit Chat Charm, we offer not just text-based interactions but a full-fledged experience with visual and auditory immersion. Get ready to be charmed!

Webpage | Play the Game! | Kickstarter

About me…

I’m Reza Amini Gougeh, an interdisciplinary engineer with a B.Sc. in Biomedical Engineering from the University of Tabriz, Iran, and an M.Sc. in Telecommunications from INRS, Montreal, Quebec. Currently, I’m a Ph.D. student at McGill University.

I started programming at 16 with HTML and CSS, then moved to C and C++. My work focuses on Human-Computer Interaction (HCI), Natural Language Processing (NLP), Large Language Models (LLMs), VR, and AI avatars. I develop intuitive interfaces, create immersive VR environments, and build AI avatars for mental health support. My expertise includes machine learning, data science, and physiological computing, which I apply with the aim of advancing healthcare globally.